How We Talk

1. It Starts with "Hello"

Imagine you are sitting in a coffee shop. Two friends, Alex and Jordan, are catching up at the table next to you. It looks effortless. They are laughing, interrupting each other, and sharing stories.

But if you look closer, something amazing is happening.

The Coffee Shop Scene

Alex starts telling a story about his weekend. It’s not a monologue. It’s a collaboration.

Alex: "So I was driving to the coast, and—"

Jordan: (interrupting excitedly) "Oh, the one near Big Sur?"

Alex: "Exactly! And the fog was so thick I could barely see the hood of my car."

Jordan: "Mm-hm, mm-hm." (Taking a sip of coffee, nodding)

Alex: "And suddenly, this deer just—"

Jordan: "No way."

Jordan didn't wait for Alex to finish his sentence to ask about Big Sur. He predicted where the sentence was going and jumped in to confirm context. Alex didn't stop; he used Jordan's interruption as a springboard. This "barge-in" didn't break the flow; it accelerated it.

The High-Stakes Dance: Returning a Toaster

Now, let's look at something more transactional. A customer, Sam, is calling support about a broken toaster. He is frustrated. The agent, Lisa, has to manage his emotions while getting facts.

Sam: "I bought this toaster, and honestly, it just—look, I know it's past the 30 days but—"

Lisa: "I understa—"

Sam: (Overlapping) "—it sparks! Like actual sparks! My kids were in the kitchen."

Lisa: "Oh, wow. Okay, sparks are a safety issue. We can definitely help with that regardless of the date."

Sam: (Exhales, relieved) "Okay, great. Because I was worried..."

Notice the dance here:

The Interruption: Sam interrupts Lisa's "I understand" because his emotional need (safety concern) hasn't been heard yet.

The Pivot: Lisa immediately stops her script. She acknowledges the "sparks" (new critical information) and shifts tone from policy-check to safety-check.

The Release: Sam hears this shift and finally yields the floor ("Exhales, relieved").

More Than Ping-Pong

We often think of conversation like a game of ping-pong. I hit the ball (I speak), you wait for it to land, then you hit it back (you speak).

But human conversation is not ping-pong. It is a dance. In ping-pong, if two people hit the ball at the same time, the game breaks. In a conversation, that kind of overlap is often necessary.

We don't wait for complete silence to start our turn. We predict when the other person is done. We bargain for the "floor" with a breath or a glance. It is messy, chaotic, and beautiful. And as we will see, it is incredibly hard to teach a machine to do it.

2. The Machine Inside Us

Conversation feels like magic—a seamless flow of ideas between two minds. But if you peel back the layers, it isn't magic at all. It's a protocol. A rigorous, invisible set of rules we call the Conversation State Machine. As humans, we learn this from experience. Machines, unfortunately, need to be taught.

Think of it as the operating system for human interaction. Its job is to manage the messiness of the real world. It handles the "grammatically irrelevant" chaos that no textbook warns you about: memory lapses, buzzing phones, sudden noises, and awkward misunderstandings.

When we speak, we aren't just sending words. We are syncing our internal state machines with one another. We lock into a shared loop that dictates exactly when to speak, when to listen, and when to just hold space.

Hacking the Gaps

Consider the "grammatically irrelevant" condition of Thinking. You are answering a question, and you need a second to remember a name. If you just stop talking, the other person’s state machine will trigger a "Turn Complete" event and they will start speaking.

So what do you do? You hack the machine. You say "Ummm..." or "You know..."

Support Agent: "Can I get your order number?"

Customer: "Sure, it is... umm... let me check... ah, yes, here it is."

That "umm... let me check" is not junk data. It is a Keep Alive signal. It tells the other person's state machine: "I am entering a Thinking State. Do not transition to Speaking State yet. I still hold the floor."
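If you think in code, here is a minimal Python sketch of that Keep Alive idea. The names (`FILLERS`, `next_listener_state`) and the 700 ms threshold are our own illustrative assumptions, not measured values. A real listener relies on far richer cues than a word list.

```python
# Hypothetical sketch: a listener deciding whether the speaker still holds the floor.
FILLERS = ("um", "umm", "uh", "you know", "let me check")  # assumed keep-alive phrases
SILENCE_LIMIT_MS = 700  # assumed threshold, for illustration only


def ends_with_filler(utterance: str) -> bool:
    """True if the utterance trails off on one of the assumed filler phrases."""
    text = utterance.lower().strip(" .,!?")
    # Match whole words only, so "album" does not count as "um".
    return any(text == f or text.endswith(" " + f) for f in FILLERS)


def next_listener_state(last_utterance: str, silence_ms: int) -> str:
    """Return 'listening' while the speaker holds the floor, else 'speaking'."""
    if ends_with_filler(last_utterance):
        return "listening"  # keep-alive: the speaker is thinking, not done
    if silence_ms >= SILENCE_LIMIT_MS:
        return "speaking"   # no keep-alive and a long gap: turn complete
    return "listening"
```

With these assumptions, a 900 ms gap after "Sure, it is... umm... let me check..." keeps the listener quiet, while the same gap after a finished sentence hands over the turn.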

The "Wait" State: Handling Distractions

Imagine talking to someone whose attention suddenly gets pulled away, by a buzzing phone or by a child acting up in the background.

Sales Rep: "So this software plan includes..."

Customer: (Turns head, yells to background) "Bobby! Stop hitting your sister!"

Sales Rep: (Stops immediately, waits)

Customer: "Sorry about that. You were saying?"

Sales Rep: "No problem. I was saying the plan includes..."

Your State Machine: Detects Attention Shift -> Transitions to "Paused/Waiting" -> Stops Speaking.

Their State Machine: Handles Distraction -> Re-engages -> Signals "Ready".

Your State Machine: Resume Speaking.

We do this error correction automatically. If we didn't, we would just keep talking while they weren't listening, and the shared structure would collapse. The state machine ensures we are always synchronized, even when the world around us is noisy and distracting.
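The pause-and-resume loop above maps neatly onto a tiny transition table. This is only a sketch: the state names, the event names (`attention_shift`, `ready`), and the helper functions are hypothetical labels we picked for illustration.

```python
from enum import Enum


class SpeakerState(Enum):
    SPEAKING = "speaking"
    PAUSED = "paused"


# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    (SpeakerState.SPEAKING, "attention_shift"): SpeakerState.PAUSED,
    (SpeakerState.PAUSED, "ready"): SpeakerState.SPEAKING,
}


def step(state, event):
    """Apply one event; events with no matching rule leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def run(events):
    """Replay events from SPEAKING and record the state after each one."""
    state = SpeakerState.SPEAKING
    history = []
    for event in events:
        state = step(state, event)
        history.append(state)
    return history
```

Replaying `["attention_shift", "ready"]` walks the Sales Rep from speaking, to paused, and back to speaking, which is the same path as the dialogue.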

The Collision: Handling Overlap

Sometimes, the synchronization fails. We both predict a pause and start speaking at the exact same time.

Alex: "I think we should—"

Jordan: (Simultaneously) "Did you see—"

Both: (Stop abruptly)

Alex: (Stays Silent)

Jordan: "No, you first."

State Machine: Detect Collision -> Abort Speaking -> Negotiate Priority -> Yield. We don't crash. We pause, signal a "Yield", and repair the conversational state instantly. This negotiation happens in milliseconds, often without us even realizing we are doing it.
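Here is one rough way to write that negotiation down. Everything in it is an assumption made for the example: the 200 ms collision window, the `floor_holder` name, and the idea that one speaker explicitly offers the floor, the way Jordan does with "No, you first."

```python
COLLISION_WINDOW_MS = 200  # assumed: starts closer together than this collide


def floor_holder(starts_ms, yielder):
    """Decide who speaks next when exactly two people start talking.

    starts_ms: dict mapping each of the two speakers to their start time in ms.
    yielder:   the speaker who offers the floor if a collision is detected.
    """
    (first, t1), (second, t2) = sorted(starts_ms.items(), key=lambda kv: kv[1])
    if t2 - t1 > COLLISION_WINDOW_MS:
        # No collision: whoever clearly started first keeps the floor.
        return first
    # Collision: both abort, then the yielder hands the floor to the other.
    return second if yielder == first else first
```

In the Alex and Jordan exchange, both start within the window and Jordan yields, so the sketch returns Alex.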

The Noise Filter: Signal vs. Sound

Your state machine is also an incredible audio engineer. It constantly filters out the world to focus on the voice.

Sam: "I'm calling about my..."

Background: (A nearby TV plays, with actual words audible)

Agent: (Stays listening, does not interrupt)

Sam: "...credit card bill."

State Machine: Detect different sound -> Classify as Background (not the speaker) -> Maintain Listening State. If the machine treated every loud sound as a "Turn Start", it would interrupt you every time a door slammed. It has to know the difference between the voice it is following and a TV, or a truck.

3. Why Robots Fail the Test

Let's be honest: talking to Siri or Alexa in 2026 feels like trying to order a pizza via telegraph. It works, technically. But it makes you want to pull your hair out. STOP.

These "assistants" are the dinosaurs of the voice world. They come from a time when we thought AI was just a command-line interface with a mouth. They are rigid, polite, and dumb as a rock when it counts.

The Walkie-Talkie Problem

The biggest issue? They are stuck in "Walkie-Talkie Mode."

You: "Alexa, play some jazz." (Over)

Alexa: (Processing... Processing...) "Playing Jazz on Spotify." (Over)

There is zero overlap. If you interrupt them, they either crash or ignore you. It's not a conversation; it's a series of hostage demands. They simply do not have the "State Machine" we discussed above. They have a simple loop: Listen -> Wait for Silence -> Process -> Speak.

And if you change your mind mid-sentence?

You: "Siri, call Mom—actually, no, call Dad."

Siri: "Calling... Mom."

You: "NO!"

To a human, "actually, no" is a standard transition trigger. To a robot, it's just more noise to cram into a predefined slot.
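The Listen -> Wait for Silence -> Process -> Speak loop is simple enough to simulate in a few lines. This is a toy model under our own assumptions, not how any particular assistant is implemented: time moves in ticks, `None` stands for silence, and the bot is deaf for `speak_ticks` ticks while it talks.

```python
def walkie_talkie(chunks, speak_ticks=2):
    """Simulate a half-duplex bot over a stream of user chunks.

    chunks: list with one entry per tick, either a str (user speech) or None (silence).
    Returns (replies, ignored): what the bot said, and the user speech it never heard.
    """
    replies, ignored, buffer = [], [], []
    busy = 0  # ticks left while the bot is speaking and not listening
    for chunk in chunks:
        if busy > 0:
            busy -= 1
            if chunk is not None:
                ignored.append(chunk)  # no barge-in: speech during playback is lost
            continue
        if chunk is not None:
            buffer.append(chunk)  # Listen
        elif buffer:
            # Wait for Silence -> Process -> Speak
            replies.append("Handling: " + " ".join(buffer))
            buffer = []
            busy = speak_ticks
    return replies, ignored
```

Feed it `["call", "Mom", None, "no, call Dad", None]` and the correction lands while the bot is busy talking, so it ends up in `ignored` instead of changing the outcome.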

The Trap: Leading Builders Astray

The real tragedy isn't that these old bots are bad. It's that they tricked an entire generation of Product Managers and Developers into thinking this is how it's supposed to work.

We spent a decade optimizing for "Keyword Recognition" and "Command Fulfillment." We built better telegraphs. Voice AI creators look at Alexa and think, "I need to make my bot respond 10% faster." No. You don't need a faster horse. You need to stop treating conversation like a transaction.

If you build an AI agent today using the "Request-Response" model of the 2010s, you aren't building a Digital Employee. You are building a fancy IVR menu that frustrates your customers. You are ignoring the messy, beautiful reality of the human state machine.

4. Designing the "Human" AI

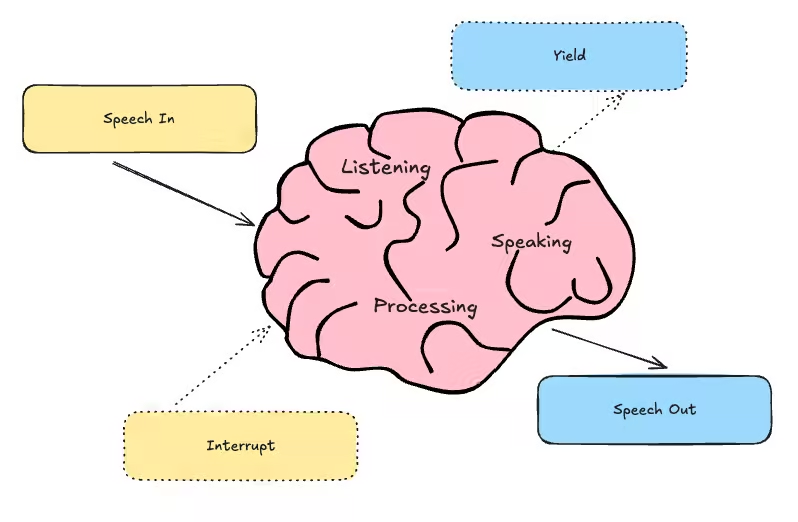

At Oration, we didn't just build a chatbot that waits for text. We reverse-engineered the human conversation state machine. We explicitly codified the states of Listening, Speaking, Thinking, and Interrupting to give our AI the same social instincts as a human.

The Instinct to Listen: "Acoustic vs. Semantic"

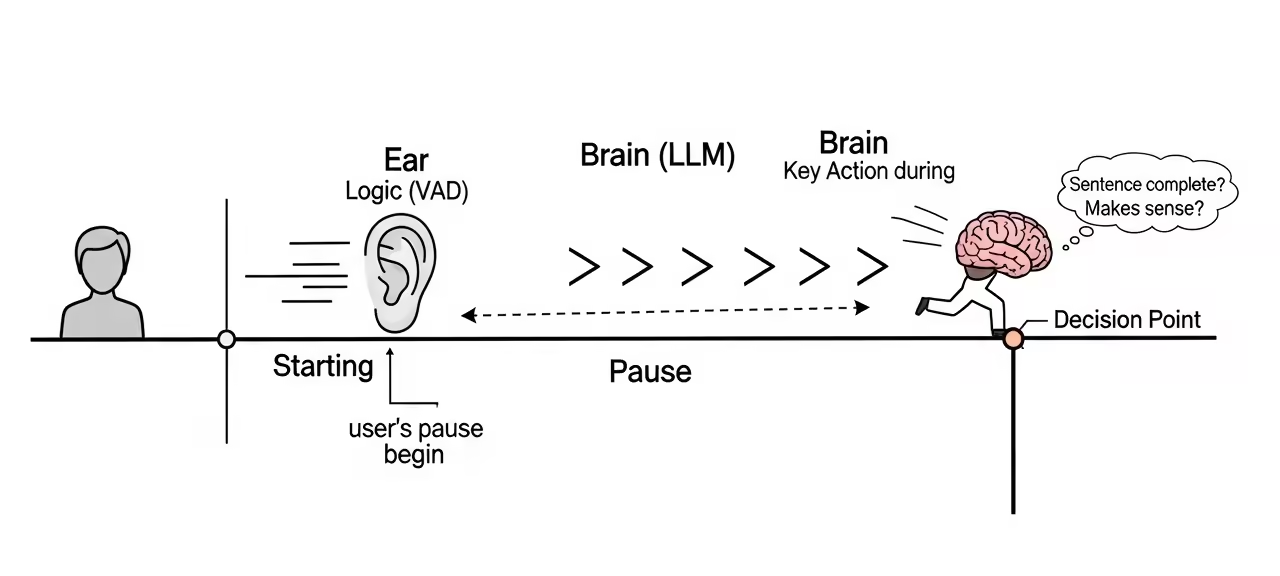

In a standard bot, "Silence = End of Turn." That’s why they cut you off when you pause to think. We built a dedicated Turn Determination Machine. It treats listening as a full-time job. It constantly weighs two competing signals:

The Acoustic Signal (The Ear): This is the raw sound waveform, run through Voice Activity Detection (VAD). It tells the system, "The user stopped making noise."

The Semantic Signal (The Brain): This analyzes the meaning of the words. It asks, "Is this sentence complete? Does it make sense to stop here?"

The Magic in the "Processing" State

When you stop speaking, our machine enters a high-speed Processing state. The "Ear" reports silence. But the "Brain" might shout, "Wait! He said 'and then...', that's a comma, not a period!"

Agent: "What's the issue with your billing?"

Customer: "Well, I looked at my statement..." (Silence: 800ms)

Classic Bot: (Interrupts) "I can help with billing."

Agent: (Waits... Semantic incomplete)

Customer: "...and I saw a charge I didn't recognize."

The machine triggers a turn-incomplete event. Instead of replying, the AI waits. It effectively holds the floor for you, knowing you are just catching your breath.
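To make the Ear-versus-Brain trade-off concrete, here is a deliberately simplified sketch. It is not our production logic: the trailing-word heuristic stands in for the semantic signal, and the thresholds, the `turn_event` name, and the "still-speaking" and "turn-complete" labels are assumptions for illustration. Only "turn-incomplete" comes from the description above.

```python
DANGLING_WORDS = {"and", "but", "so", "because", "then", "or"}  # assumed heuristic
MIN_SILENCE_MS = 500  # assumed: shorter gaps are not treated as a pause at all
MAX_WAIT_MS = 3000    # assumed cap so the agent never waits forever


def looks_complete(transcript: str) -> bool:
    """Crude stand-in for the semantic signal (the Brain)."""
    text = transcript.strip().lower()
    if not text or text.endswith("..."):
        return False  # nothing said yet, or the speaker trailed off
    words = text.rstrip(".,!?").split()
    return bool(words) and words[-1] not in DANGLING_WORDS


def turn_event(transcript: str, silence_ms: int) -> str:
    """Combine the acoustic signal (silence length) with the semantic one."""
    if silence_ms < MIN_SILENCE_MS:
        return "still-speaking"
    if looks_complete(transcript) or silence_ms >= MAX_WAIT_MS:
        return "turn-complete"
    return "turn-incomplete"
```

With these numbers, "Well, I looked at my statement..." followed by 800 ms of silence yields "turn-incomplete", so the agent keeps waiting. The cap is a design choice: past it, the Ear overrules the Brain so the caller is never left hanging.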

The Instinct to Yield: Handling Interruptions

In the AgentSpeaking state, the AI is not just blasting audio. It keeps its "ears" open.

We implemented a Barge-In handler. If the user speaks while the agent is talking (User Message In), the system triggers a handleInterruption event.

But it’s smart. It checks isInterruptionValid first, so it doesn't fall silent at every stray sound.

Agent: "I can definitely renew that policy for you. It covers—"

Customer: "Mm-hm." (Backchannel)

Agent: "—accidental damage and theft. Would you like to proceed?"

Background noise? (Acoustic only) -> Ignore. Keep speaking.

"Mm-hm" or "Right"? (Backchannel) -> Acknowledge the signal but keep speaking.

"Wait, stop!" (True Interrupt) -> Immediately cut audio, transition to AgentListening, and handle the new context.
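The three cases above can be sketched as a small decision function. The function names below are snake_case stand-ins for handleInterruption and isInterruptionValid. The bodies, the backchannel list, and the convention that `None` means "noise with no recognizable words" are assumptions for the example, not our actual implementation.

```python
from typing import Optional

# Assumed backchannel vocabulary, for illustration only.
BACKCHANNELS = {"mm-hm", "mhm", "uh-huh", "right", "yeah", "ok", "okay"}


def is_interruption_valid(transcript: Optional[str]) -> bool:
    """True only for speech that is more than noise or a backchannel."""
    if transcript is None:
        return False  # acoustic only: background noise, no words
    text = transcript.strip().lower().strip(".,!?")
    if not text:
        return False
    return text not in BACKCHANNELS


def handle_interruption(state: str, transcript: Optional[str]) -> str:
    """Return the next state after user audio arrives."""
    if state == "AgentSpeaking" and is_interruption_valid(transcript):
        return "AgentListening"  # cut audio and yield the floor
    return state  # noise or backchannel: keep going
```

So `handle_interruption("AgentSpeaking", "Mm-hm.")` stays in AgentSpeaking, while "Wait, stop!" moves to AgentListening.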

The Instinct to Hold: The Art of Thinking

Sometimes, the AI needs to fetch data (e.g., checking order status). In a typical bot, this is dead air. The user thinks the call dropped.

We created an AgentWorking state. When the AI goes to fetch data, it transitions here.

Customer: "Can you check if the size 10 is in stock?"

Agent: "Let me check that for you..." (Filler audio plays while API loads)

Agent: (3 seconds later) "Still looking it up, one second..."

Agent: "Okay, found it! We have one pair left."

Immediate reaction: It plays a filler: "Let me check that for you..."

Delayed reaction: If the API is slow, it loops back with: "Still looking it up, one second..."

This matches the human "Keep Alive" signal we discussed in Section 2. It holds the floor and maintains the connection, even when no "real" information is being exchanged.
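A minimal way to sketch this pattern is with Python's asyncio: start the lookup, speak right away, then nudge the caller on a timer until the result arrives. The `agent_working` function, its `fetch` and `say` parameters, and the 3-second default are hypothetical names and values chosen for the example.

```python
import asyncio


async def agent_working(fetch, say, nudge_every=3.0):
    """Stay in a hypothetical AgentWorking state until fetch() finishes.

    fetch: async function that returns the data (for example, a stock lookup).
    say:   callback that plays one line of filler audio.
    """
    task = asyncio.create_task(fetch())
    say("Let me check that for you...")  # immediate reaction
    while True:
        # Wait for the result, but only up to nudge_every seconds at a time.
        done, _pending = await asyncio.wait({task}, timeout=nudge_every)
        if done:
            return task.result()
        say("Still looking it up, one second...")  # delayed reaction
```

You would call it with something like `asyncio.run(agent_working(check_stock, play_audio))`, where `check_stock` and `play_audio` are whatever your own stack provides. The point of the design is that the lookup and the talking run side by side, so a slow API never turns into dead air.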

5. The Future: Conversations, Not Commands

The switch from "Command" to "Conversation" changes everything. When an AI can hold the state of a conversation, it stops being a tool you use and starts being a partner you work with. You stop barking orders ("Turn on lights") and start exploring ideas ("Help me figure out why my bill is so high").

It's Happening Now

This isn't just theory or a distant dream. The entire Voice AI industry is waking up to this reality. We are moving away from the rigid "Command-and-Control" bots of the last decade and entering an era of Dynamic Conversation.

Researchers and engineers across the globe are now treating conversation not as a text processing task, but as a real-time, multi-modal dance. We are seeing models that can "hear" a sigh, "feel" the hesitation in a pause, and understand that silence is often just as loud as words.

A New Era of Interaction

We are uncovering the hidden rules of human speech one nuance at a time. It is an endless cycle of Experiment -> Analyze -> Adapt. As we feed these learnings back into our state machines, the line between "talking to a computer" and "talking to a helper" will start to blur.

Try It Yourself

You don't have to take my word for it. You can see this complicated, messy, beautiful machine in action today. Go to oration.ai and talk to one of our agents. Interrupt it. Mumble. Change your mind. See if it can keep up with the dance.

Because the future of AI isn't about writing better code. It's about understanding how we talk.